
Who Is Liable When A Self-Driving Car Causes A Crash?

When self-driving vehicles first emerged, they seemed like science fiction, but now they are part of reality. While they may not be as common as expected, they are increasingly used as a transportation option in both private and commercial settings.

One of the reasons self-driving cars aren’t as common as you might think is safety. It will take longer for people to trust them. There is also the question of who is responsible when a self-driving car is involved in a crash. This is a crucial question for the victims of such crashes, because the answer determines who pays for medical bills, property damage, and other expenses in legal claims when something goes wrong.

What It Really Means When a Car Drives Itself

Most people think a self-driving car does it all by itself, no steering wheel necessary, and no one watching the road. That is an oversimplification of what an autonomous vehicle does. Not every car that appears to drive itself is considered legally autonomous, and this makes a big difference when there’s a crash.

There are varying levels of automation in vehicles, and those levels determine who’s really driving. The industry scale, defined by SAE International, runs from zero to five. At level zero, there is no automated driving at all. At levels one and two, the car might help you steer or set the speed, but you are still liable. The car can do a lot more at level three, but the human driver is meant to be sitting there, ready to take over. Systems at these lower levels are the types you see in many newer vehicles today.

Simply because a car can keep to its lane or follow traffic without constant human input does not make it autonomous. Many of the systems that appear sophisticated come with requirements to pay attention and remain engaged. So when something goes wrong, the driver often winds up sharing the blame, even if the technology was engaged at the time.

Level four is what most people think of as full autonomy, where a car can actually drive itself without a person at the wheel, but only in certain places and under certain conditions. Level five would mean total control in all situations, from city streets to highways to bad weather. We are nowhere near that, though highly automated test vehicles are now being used in trials.

With these different levels of automation, the line between human and machine control is not always clear. The big challenge for investigators is to determine whether the car’s self-driving system was engaged or in control when the crash occurred.

They also need to know what the driver was doing at the time of the crash and whether the vehicle provided any warnings before the impact. Another key question is how much time (if any) the driver had to take over. Even a minor detail can turn an entire case on its head. This is why you need specialized legal knowledge and experience to handle such cases.

Why the Human Behind the Wheel Is Not Always Off the Hook

Just because a car has self-driving features does not mean the person inside gets to relax completely. That is one of the biggest misunderstandings about this technology. Even with advanced systems, the person in the driver’s seat is usually still responsible for what the vehicle does.

As we have already established, most of these vehicles are not fully autonomous, and this is important in such cases. Most of them can help with certain things, like controlling speed or staying in a lane, but the driver must stay alert. If something unexpected happens and the driver is not paying attention, they can be blamed for the crash. It does not matter if the car was doing most of the work. The law still expects the human to step in when needed.

There are cases where the car gives a warning. Maybe it senses something unusual or decides the road is too complicated for the system to handle alone. That warning is not a suggestion. It means the driver needs to take over right away. If the driver is checking their phone or not looking at the road, and something goes wrong, it can be hard to argue they were not at fault.

You might have seen videos of drivers sleeping while the car travels down the highway, or sitting in the backseat like no one needs to be in charge. When those situations lead to crashes, the fault almost always lands on the person, not the car.

Until we reach a point where no human is needed at all, this is how it will work. These cars are smart, but they are not in full control. The driver still matters. And when things go wrong, being behind the wheel still comes with real responsibility.

When the Car Manufacturer Is Responsible for What Went Wrong

Sometimes, the fault is not with the driver; it is the vehicle, or more accurately, the company that built it. These cars depend on computer code, sensors, decision-making systems, and dozens of design choices. When something breaks down and causes harm, you have to ask whether the fault lies with the people who made the machine.

Think about what these systems are expected to do. A self-driving car has to detect people, read traffic signs, react to weather, respond to moving objects, and make instant decisions. If any one of those decisions fails, a crash can happen. So what went wrong? Was it the hardware? Did the software freeze up or misjudge the situation? Was the sensor misaligned or the braking system delayed? These are not small errors. They are often signs that the system was not designed or tested well enough.

Car makers are supposed to know better. If they rush something to market or leave bugs in the software, that is not just a technical problem. It is a safety issue. There have been cases where a vehicle did not recognize a bicycle, or thought a trailer was the sky, or failed to brake because the lighting confused the camera. If the driver trusted the technology and followed the rules, but the system failed anyway, then the responsibility could shift to the manufacturer.

It gets even trickier with vehicles that update themselves. Some cars get new software over the internet, which means a change could be made without the driver knowing what was altered. If an update leads to a bad decision and someone gets hurt, is it still the driver’s fault? Or does it go back to the team that sent the update?

There is also the problem of trust. Companies advertise these features, highlight their convenience, and call their cars smart or safe. However, if the car crashes while doing exactly what the company said it could do, then what? It is fair for people to believe the system will work. And when it does not, it is not just unfortunate; it might be negligence.

Laws are still catching up to all this, but the idea is not new. If a product harms someone because it was poorly made or poorly explained, the company behind it can be held responsible. That does not change just because the product is intelligent or connected to the internet.

For anyone injured in one of these crashes, it is not always clear what happened at first, but over time, when the evidence is examined and the flaws come to light, the truth gets harder to ignore. If the vehicle failed in a way that should never have happened, that failure may point straight back to the manufacturer.

How Third-Party Technology Can Complicate Fault

Not everything inside a self-driving car is made by the same company. Parts come from different sources. So does the software. Behind what seems like a single system is often a complicated mix of equipment built by people at different companies, in different locations, often working under separate contracts. That becomes a real issue when a crash happens and someone gets hurt. Because then the question is which piece failed.

Let us say the car takes a wrong turn or runs a red light. Maybe it did not read the sign correctly. Maybe the navigation system gave the wrong direction. That software might not have been built by the automaker. It might have come from a third party. Same with the visual recognition system. Same with the mapping software. Same with the over-the-air update that changed the behavior of the vehicle the night before. Now the crash is not about one company’s mistake. It might involve two or three.

This is more common than most people think. Many companies specialize in one function. One team builds the core driving system. Another handles GPS. Another works on pedestrian tracking. The car company brings those pieces together. But when something goes wrong, it becomes hard to tell where one responsibility ends and another begins.

These layers of software are not always visible to the driver, but when the car behaves strangely, it matters. Because if the automaker relied on an outside system and that system caused the crash, the liability might shift.

Sometimes the automaker blames the vendor. The vendor blames the integration. The driver gets caught in the middle. Meanwhile, someone still has to pay the bills resulting from the accident. That is what makes these cases complex. It is not just one car and one driver; there are layers of responsibility. Lawyers often have to trace decisions through the code, through internal emails, and through update logs. That takes time. It also takes access that the average person does not have.

From the outside, the car looks like one product, but it is a mix of different companies making different promises. When those promises break, sorting out who is accountable can take longer than anyone expects.

The Vehicle Owner’s Role in Keeping the Car Safe to Drive

Most people do not think about who owns a car after a crash. However, the person who owns the vehicle holds a certain level of responsibility, even when the car can drive itself.

It is easy to forget that these cars, even the most advanced ones, still need maintenance. They need updates, working sensors, clean lenses, and properly calibrated systems. If the owner ignores those things, skips maintenance, or disables key features, that decision can come back to haunt them. And when it does, it does not matter how smart the car was supposed to be. It was not set up to work the way it should have, and that matters in court.

A car can fail to stop in time because the camera was blocked or dirty, or it can drift out of its lane because a sensor was never reset after a minor bumper repair. These are real-world examples of negligent maintenance. In cases like these, the focus lands on the person who was responsible for making sure the vehicle was ready for the road. If that person ignored an alert or failed to schedule service, that failure can become a big part of the case.

There are also people who try to outsmart the system. They install workarounds, cover up alerts, or use tricks to keep the car moving without supervision. It might seem clever until there is a crash. Once that happens, those decisions turn into evidence. It is hard to claim you did nothing wrong when the logs say otherwise.

This is especially true for businesses that own fleets. A delivery company or ride service running a group of semi-autonomous cars has a duty to keep every one of them safe and up to date. If they cut corners to save money or skip software patches to avoid downtime, they open themselves up to serious legal problems. When a crash follows, those choices are not just bad decisions. They can become the reason a claim or lawsuit is filed.

Self-driving technology is powerful, but it is not a replacement for good judgment. These cars are still machines. They rely on humans to keep them in good condition, whether that means checking tire pressure or installing the latest safety update. When that step gets skipped and someone gets hurt, the consequences fall on whoever was responsible for taking care of the car.

How Insurance Companies Decide Who Pays After a Crash

Insurance used to be simple. A person caused a crash, and their insurance paid. But when self-driving cars are involved, everything feels less clear. When a vehicle can steer, brake, and make decisions on its own, who is actually responsible when something goes wrong? The answer is not always the driver, and insurance companies know that, which is why these cases get far more complicated than a normal fender bender.

The first thing insurers look at is how much control the vehicle had at the moment of the collision. They might pull the data from the car to see whether the self-driving system was active, whether it issued any warnings, and how the driver responded. Many vehicles store this data, making it an important piece of evidence in determining who pays.

If the self-driving system asked the driver to take over and the driver did nothing, insurance companies might hold the human at fault. They will ask questions such as: Was the driver distracted? Were they looking at the road or staring at a phone? Did they ignore alerts? Auto manufacturers often warn that drivers must remain attentive, even when driving assistance is active. Insurance companies love pointing to those warnings when trying to push liability back onto the person behind the wheel.

In some cases, the self-driving system behaves in a way no reasonable person could predict. The car may misread a traffic sign, fail to detect a pedestrian, or apply the brakes too late. If the driver was paying attention and the system still made a bad decision, the insurance company may look beyond the driver and examine whether the technology failed. That is when product liability enters the picture and things become even more complex.

There is also the issue of software updates. Many self-driving vehicles update over the air. Did the latest update put the driver in a situation they did not expect? That can open the door to holding the automaker or software provider partially responsible.

Ownership of the vehicle matters too. Some self-driving cars are privately owned, while others are part of a fleet or ride-hailing service. If a company owns the vehicle and fails to maintain sensors or install required updates, it might be liable. If the car was leased, insurance investigators might check whether the maintenance contract was followed.

Insurance companies have started creating special teams to handle autonomous vehicle claims because these crashes take longer to investigate. They need technical experts to interpret the data logs. They sometimes hire accident reconstruction specialists who understand machine learning behavior. They may even bring in engineers to explain why the car made a certain choice in the seconds before the crash. For everyday drivers, such cases can feel overwhelming, and that is why getting legal help might become important.

Why the Law Still Has No Clear Answer for Many Autonomous Accidents

Traffic laws were built on the assumption that a human is always in control. That assumption no longer holds true. A vehicle at level one or two automation still relies on a human. A car at level three can do most of the work, but the person inside is expected to take over when needed. Level four can drive on its own in specific areas. Level five would need no human intervention at all. These levels exist in engineering standards, but the law does not treat each level differently in a clear way, which leads to confusion after a crash.

If the system was only assisting, the law often still sees the human as the driver. If the system was fully controlling the car, it becomes much harder to assign fault. Did the software make a wrong decision? Did the sensors fail? Did the company test the feature well enough? These are product liability questions rather than traditional traffic law questions.

Courts rarely see cases like this go to trial. Many self-driving crashes are settled quietly out of court because companies want to avoid public scrutiny and legal precedent. That means there are very few rulings that clearly define who is responsible. Without precedent, lawyers and judges have little guidance. Each case becomes a battle of experts and interpretations.

Another challenge is the difference between state and federal rules. Some states have begun passing laws regarding autonomous vehicles, but most focus on permitting and testing rather than liability. The federal government has issued guidelines and safety frameworks, but they are not binding laws. So in many places, the decision about who is responsible after a crash depends on traditional negligence standards that do not fit well with machine decision-making.

Then there is the question of warnings and instructions. If a company tells drivers that they must stay alert and ready to take over, can the company be held responsible when the driver does not? What if the vehicle gave no warning? What if the system failed silently? What if the car made a decision a human could not physically respond to in time? The law does not clearly define the limits of human responsibility in a highly automated system.

Things get even more complicated with learning systems. Some self-driving software improves over time by learning from data. If the car learns a behavior that ends up causing a crash, who made the decision? The engineers who wrote the original code? The company that approved the training data? The algorithm? Traditional liability law was never designed for machines that can change behavior long after they leave the factory.

There are also ethical questions. Should a self-driving car follow traffic laws strictly or bend them in emergencies? Should it prioritize the safety of its passengers or pedestrians? Different companies might program different answers, and the law has not decided which is correct. If a car makes a split-second choice based on programmed ethics, lawyers must decide whether that choice was reasonable.

Until courts and lawmakers address these gaps, the law will lag behind the technology. Autonomous vehicles will continue to operate in a legal gray area where responsibility is debated case by case. Without clearer rules, victims may struggle to know their rights, and companies may escape accountability.

How an Experienced Attorney Can Help in Self-Driving Accident Cases

When someone is involved in a crash with a self-driving vehicle, it is easy to assume the process works the same as any other accident. File a claim, talk to insurance, wait for answers. Unfortunately, cases involving autonomous technology are rarely that simple. The truth is that these crashes often require legal help because they involve layers of responsibility, complex data, and companies that are prepared to defend themselves.

An experienced Stockton personal injury lawyer who understands self-driving systems knows where to look for answers. The first step is figuring out who was in control at the time of the crash. Was the system engaged? Was the human driver supposed to take over? Did the car issue a warning? These details are not based on witness statements alone. They come from data logs stored inside the vehicle. Only a trained professional knows how to obtain and interpret that data before it is overwritten or lost.

Figuring out who is at fault is not always as simple as pointing to the driver. What if the car was controlling the speed or made a turn on its own? What if the software read the road wrong or a sensor failed to notice someone? Sometimes, the car even updates itself without the driver knowing. If the system changed and caused the mistake, is it fair to blame the person inside? At that point, the real question becomes whether the technology worked safely and whether the company behind it did its job.

-

Health4 days ago

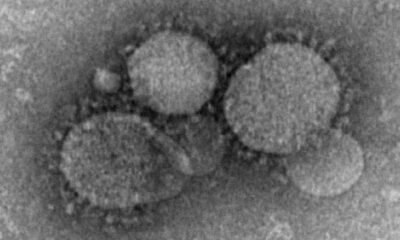

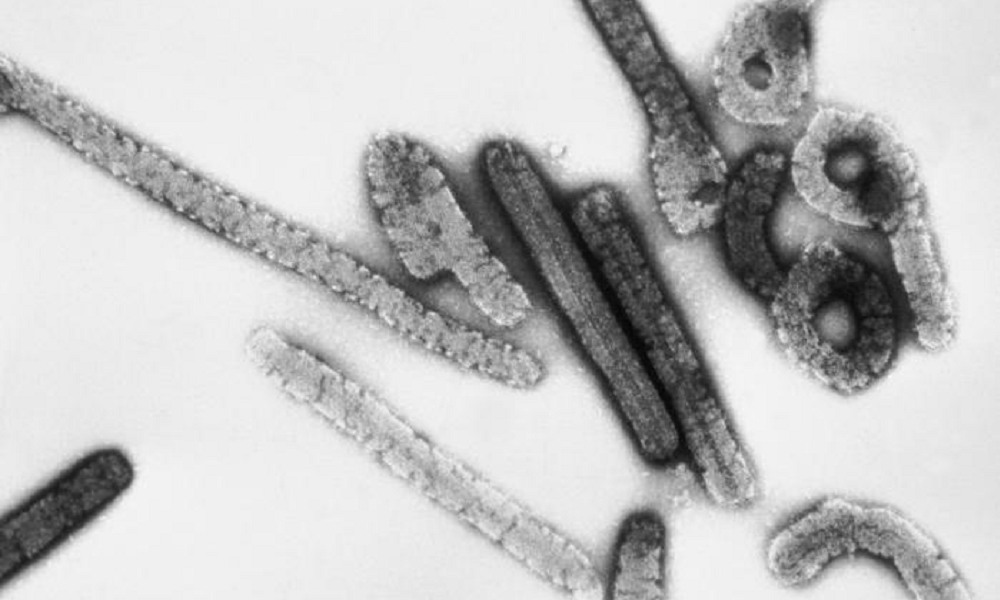

Health4 days agoFrance confirms 2 MERS coronavirus cases in returning travelers

-

Health6 days ago

Health6 days ago8 kittens die of H5N1 bird flu in the Netherlands

-

Entertainment4 days ago

Entertainment4 days agoJoey Valence & Brae criticize DHS over unauthorized use of their music

-

Legal1 week ago

Legal1 week ago15 people shot, 4 killed, at birthday party in Stockton, California

-

US News6 days ago

US News6 days agoFire breaks out at Raleigh Convention Center in North Carolina

-

US News1 day ago

US News1 day agoMagnitude 7.0 earthquake strikes near Alaska–Canada border

-

Health5 days ago

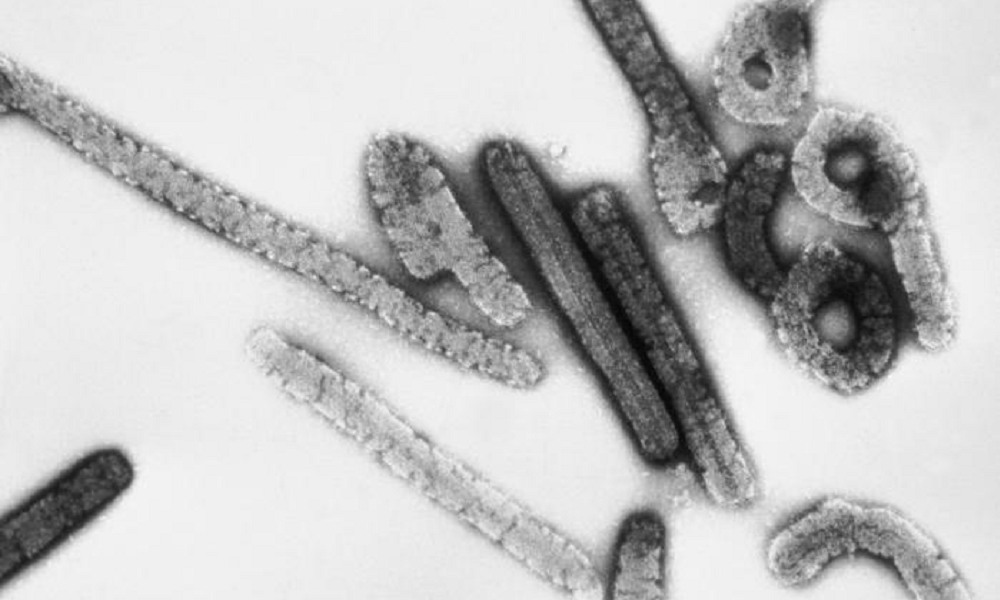

Health5 days agoEthiopia reports new case in Marburg virus outbreak

-

Legal3 days ago

Legal3 days agoWoman detained after firing gun outside Los Angeles County Museum of Art