Best Model Context Protocol Tools and Solutions in 2025

The Model Context Protocol (MCP) is an open standard for connecting AI assistants to the systems where data lives, including content repositories, business tools, and development environments. By giving AI models a single, universal way to reach those data sources and tools, it makes supplying context to AI systems much easier. As organizations adopt AI-powered workflows, integration between AI models and enterprise data becomes increasingly important.

Even the most sophisticated models are constrained by their isolation from data, trapped behind information silos and legacy systems. Every new data source requires its own custom implementation, making truly connected systems difficult to scale. MCP addresses this by letting AI assistants retrieve documents from knowledge bases, query databases, or call external APIs through a single, unified protocol.
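To make the "unified protocol" idea concrete, here is a minimal sketch of the message shape involved. MCP messages are JSON-RPC 2.0, and `tools/list` and `tools/call` are method names from the MCP specification. The tool name `search_documents` and its arguments are hypothetical, and transport and session initialization are left out.

```python
import json
from itertools import count
from typing import Optional

_ids = count(1)  # simple incrementing request IDs


def make_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request, the envelope MCP messages use."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# Ask a server which tools it offers, then call one of them.
list_tools = make_request("tools/list")
call_tool = make_request(
    "tools/call",
    # Hypothetical tool name and arguments, for illustration only.
    {"name": "search_documents", "arguments": {"query": "Q3 revenue"}},
)

print(list_tools)
print(call_tool)
```

The same two message shapes apply whether the server fronts a database, a file store, or a SaaS API, which is what lets a client reuse one code path across data sources.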

K2view MCP Server – Our top pick

K2view provides a high-performance MCP server designed for real-time delivery of multi-source enterprise data to LLMs. Using entity-based data virtualization tools, it enables granular, secure, and low-latency access to operational data across silos. What sets K2view apart is its enterprise-grade approach to data security and performance optimization.

K2view’s MCP implementation excels in handling complex enterprise data scenarios where traditional solutions struggle. The server securely connects GenAI apps with enterprise data sources, enforces data policies, and delivers structured data at conversational latency, which improves LLM response accuracy and personalization while maintaining governance. The platform’s entity-based virtualization keeps data consistent across different systems while maintaining strict access controls.

For enterprises requiring robust data governance and real-time performance, K2view represents the gold standard in MCP server implementations. Its ability to handle multi-source data integration while maintaining enterprise security standards makes it the preferred choice for production environments.

Anthropic’s Official MCP Servers

To help developers get started, Anthropic provides pre-built MCP servers for popular enterprise systems such as Google Drive, Slack, GitHub, Git, Postgres, and Puppeteer. These official implementations serve as reliable starting points for organizations beginning their MCP journey.

Anthropic’s servers benefit from direct integration with Claude Desktop and comprehensive documentation. All Claude.ai plans support connecting MCP servers to the Claude Desktop app. Claude for Work customers can begin testing MCP servers locally, connecting Claude to internal systems and datasets. The official servers maintain high reliability standards and receive regular updates aligned with protocol evolution.
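As a rough illustration of local setup, the sketch below generates the kind of JSON that Claude Desktop reads from its `claude_desktop_config.json` file, where each entry under `mcpServers` names a command to launch. The package name `@modelcontextprotocol/server-postgres` comes from the reference servers repository and may have changed since, and the connection string is a placeholder, so treat both as assumptions to check against current documentation.

```python
import json


def build_desktop_config(servers: dict) -> str:
    """Wrap server launch entries in the top-level "mcpServers" key."""
    return json.dumps({"mcpServers": servers}, indent=2)


config = build_desktop_config(
    {
        "postgres": {
            "command": "npx",
            "args": [
                "-y",
                "@modelcontextprotocol/server-postgres",  # assumed package name
                "postgresql://localhost/mydb",  # placeholder connection string
            ],
        }
    }
)

print(config)
```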

Atlassian’s Remote MCP Server

Atlassian’s Remote MCP server lets Jira and Confluence Cloud customers interact with their data directly from Claude, Anthropic’s AI assistant. This enterprise-focused solution addresses the specific needs of teams using Atlassian’s productivity suite.

With Atlassian’s Remote MCP Server, teams can access their Jira tickets and Confluence documentation conveniently within Claude. This means less context switching, faster decision-making, and ultimately, more time spent on meaningful work. The remote hosting approach ensures enterprise-grade security while eliminating local setup complexity.

Playwright MCP for Test Automation

Microsoft has introduced Playwright MCP (Model Context Protocol), a server-side enhancement to its Playwright automation framework designed to facilitate structured browser interactions by Large Language Models (LLMs). Unlike traditional UI automation that relies on screenshots or pixel-based models, Playwright MCP uses the browser’s accessibility tree to provide a deterministic, structured representation of web content.

By enabling LLMs to interact with web pages using structured data instead of visual cues, this protocol improves the reliability and clarity of automated tasks such as navigation, form-filling, and content extraction. It also supports automated test generation, bug reproduction, and accessibility checks directly from natural language inputs.
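The difference between structured and pixel-based interaction can be sketched with a toy accessibility tree. This is not Playwright MCP's actual snapshot format. The `AXNode` class, the `ref` field, and the sample page are hypothetical, chosen only to show why looking up an element by role and name is deterministic.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Optional


@dataclass
class AXNode:
    """One node of a simplified accessibility tree."""
    role: str
    name: str = ""
    ref: str = ""  # hypothetical handle a tool call could target
    children: List["AXNode"] = field(default_factory=list)


def walk(node: AXNode) -> Iterator[AXNode]:
    """Yield the node and all of its descendants, depth first."""
    yield node
    for child in node.children:
        yield from walk(child)


def find(root: AXNode, role: str, name: str) -> Optional[AXNode]:
    """Return the first node matching role and accessible name, if any."""
    for node in walk(root):
        if node.role == role and node.name == name:
            return node
    return None


page = AXNode("document", "Sign in", children=[
    AXNode("textbox", "Email", ref="e1"),
    AXNode("textbox", "Password", ref="e2"),
    AXNode("button", "Sign in", ref="e3"),
])

target = find(page, "button", "Sign in")
print(target.ref if target else "not found")
```

Because the lookup depends on roles and names rather than screen coordinates, the same query keeps working when layout or styling changes.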

Notion MCP Server

This MCP server exposes Notion data (pages, databases, tasks) as context to LLMs, allowing AI agents to reference workspace data in real-time. It’s a practical tool for knowledge assistants operating within productivity tools. The Notion integration proves particularly valuable for teams that centralize their documentation and project management within Notion’s ecosystem.

The server enables AI assistants to access and manipulate Notion content seamlessly, making it ideal for knowledge management scenarios where teams need AI assistance with existing documentation workflows.

Supabase MCP Server

The Supabase MCP Server bridges edge functions and Postgres to stream contextual data to LLMs. It’s built for developers who want serverless, scalable context delivery based on user or event data. This solution appeals to development teams building modern applications with real-time data requirements.

The serverless architecture ensures cost efficiency while maintaining the scalability needed for production applications. Its integration with Postgres provides robust data management capabilities for complex AI-powered applications.

Pinecone Vector Database MCP

Built on Pinecone’s vector database, this MCP server supports fast, similarity-based context retrieval. It’s optimized for applications that require LLMs to recall semantically relevant facts or documents. This solution excels in scenarios requiring sophisticated semantic search capabilities.

Vector databases like Pinecone enable AI applications to find contextually relevant information based on meaning rather than exact keyword matches, making them essential for advanced AI-powered search and retrieval systems.
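Retrieval by meaning rather than keywords comes down to comparing embedding vectors. The sketch below ranks documents by cosine similarity in plain Python. It does not use Pinecone's API, and the three-dimensional vectors and document IDs are made-up stand-ins for real embeddings produced by a model.

```python
import math
from typing import Dict, List


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query: List[float], index: Dict[str, List[float]], k: int = 2) -> List[str]:
    """Return the IDs of the k vectors most similar to the query."""
    ranked = sorted(index, key=lambda doc_id: cosine(query, index[doc_id]), reverse=True)
    return ranked[:k]


# Toy index: hypothetical document IDs mapped to made-up embeddings.
index = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.1],
    "returns-howto": [0.8, 0.2, 0.1],
}
query = [1.0, 0.0, 0.0]

print(top_k(query, index))  # ['refund-policy', 'returns-howto']
```

A vector database performs the same ranking, but with approximate nearest-neighbor indexes so that it scales to millions of vectors.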

Community-Built OpenAPI MCP Server

A community-built OpenAPI MCP server gives LLMs transparent, standardized access to APIs described by OpenAPI specifications. It demonstrates interoperability between LLM tools and open data resources. This open-source solution provides flexibility for organizations with diverse API requirements.

The OpenAPI integration allows for standardized access to various web services and APIs, making it valuable for organizations that need to connect AI systems with existing API infrastructure.
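One plausible way such a bridge could work is to translate each OpenAPI operation into an MCP tool definition. The sketch below is an assumption about the general approach, not the community server's actual code. The `name`, `description`, and `inputSchema` fields follow the MCP tool shape, while the sample spec and the `openapi_to_tools` helper are hypothetical.

```python
from typing import Any, Dict, List

HTTP_METHODS = {"get", "post", "put", "patch", "delete"}


def openapi_to_tools(spec: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Map each OpenAPI operation to a tool definition with a JSON Schema input."""
    tools = []
    for path, item in spec.get("paths", {}).items():
        for method, operation in item.items():
            if method not in HTTP_METHODS:
                continue  # skip non-operation keys such as "parameters"
            properties: Dict[str, Any] = {}
            required: List[str] = []
            for param in operation.get("parameters", []):
                properties[param["name"]] = param.get("schema", {"type": "string"})
                if param.get("required"):
                    required.append(param["name"])
            tools.append({
                "name": operation.get("operationId", f"{method}_{path}"),
                "description": operation.get("summary", ""),
                "inputSchema": {
                    "type": "object",
                    "properties": properties,
                    "required": required,
                },
            })
    return tools


# Hypothetical one-endpoint spec used only to exercise the mapping.
spec = {
    "openapi": "3.0.0",
    "paths": {
        "/orders/{orderId}": {
            "get": {
                "operationId": "getOrder",
                "summary": "Fetch one order by ID",
                "parameters": [
                    {"name": "orderId", "in": "path", "required": True,
                     "schema": {"type": "string"}},
                ],
            }
        }
    },
}

tools = openapi_to_tools(spec)
print(tools[0]["name"], tools[0]["inputSchema"]["required"])
```

This sketch handles only path and query parameters declared on the operation; request bodies, shared parameters, and `$ref` resolution would need additional handling.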

Instead of maintaining separate connectors for each data source, developers can now build against a standard protocol. As the ecosystem matures, AI systems will maintain context as they move between different tools and datasets, replacing today’s fragmented integrations with a more sustainable architecture. The Model Context Protocol represents a fundamental shift toward standardized AI-data integration, enabling organizations to build more robust and maintainable AI-powered systems.

-

World1 week ago

World1 week agoEthiopian volcano erupts for first time in thousands of years

-

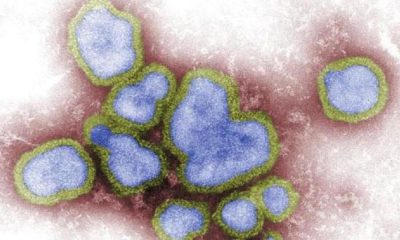

Health1 day ago

Health1 day ago8 kittens die of H5N1 bird flu in the Netherlands

-

Legal6 days ago

Legal6 days agoUtah Amber Alert: Jessika Francisco abducted by sex offender in Ogden

-

US News5 days ago

US News5 days agoExplosion destroys home in Oakland, Maine; at least 1 injured

-

Health6 days ago

Health6 days agoMexico’s September human bird flu case confirmed as H5N2

-

Legal3 days ago

Legal3 days ago15 people shot, 4 killed, at birthday party in Stockton, California

-

World6 days ago

World6 days agoWoman killed, man seriously injured in shark attack on Australia’s NSW coast

-

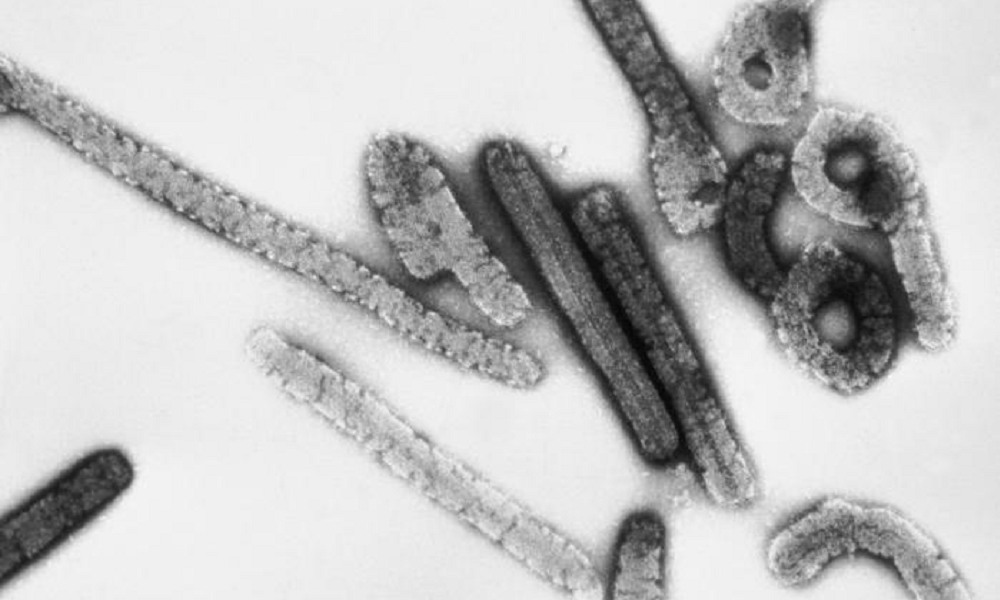

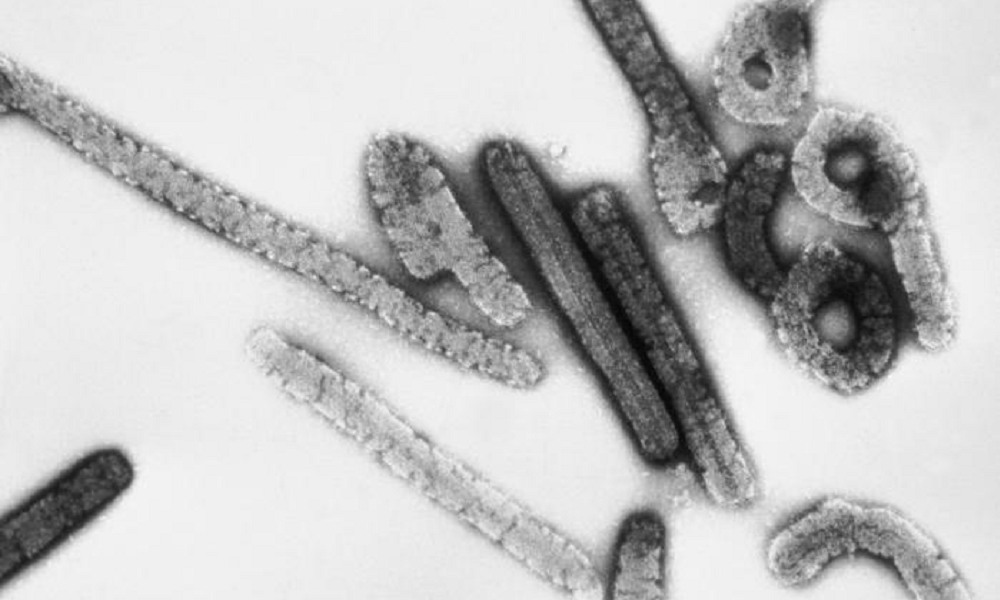

Health5 days ago

Health5 days agoMarburg outbreak in Ethiopia rises to 12 cases and 8 deaths